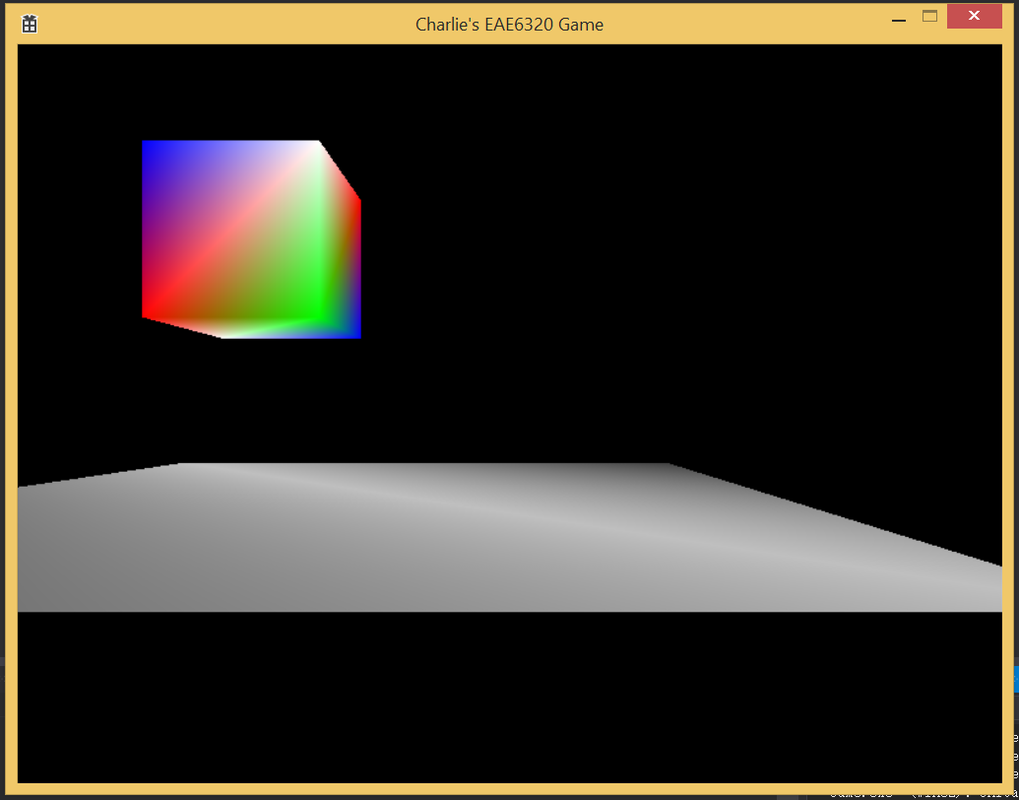

The vertex shader uses three transformation matrices. The first transforms a 3D vertex from local coordinates to world coordinates. For the box, the local origin is the center of the box, so a vertex at (1, 1, 1) in local space is first moved into world space by the local_to_world matrix. World space is the single space that every object lives in: vertices with the same local position can end up at different world positions, and vice versa. Next we need to see the world from the camera, so we apply a world_to_view transform to the previous result. By default the camera sits at (0, 0, 1) and looks in the (0, 0, -1) direction. This second transform tells us where everything is relative to the camera. Finally, a third matrix applies the camera-specific parameters, which is where the field of view and the near and far clipping planes come in. After applying all three matrices, we get the picture above.
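To make the chain of transforms concrete, here is a minimal sketch in plain Python. It is not the actual shader code: the box's world position, the 60 degree field of view, and the 4:3 aspect ratio are made-up values, the camera is assumed to have no rotation, and `view_to_screen` is an assumed name for the third matrix, built here as an OpenGL-style perspective projection.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform(m, v):
    """Apply a 4x4 matrix to a 4-component column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]

def translation(tx, ty, tz):
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style projection: the camera looks down -z."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [[f / aspect, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]

# Assumption: the box sits at (2, 0, -5) in the world, with no rotation or scale.
local_to_world = translation(2.0, 0.0, -5.0)

# Camera at (0, 0, 1) looking down -z; with no rotation, the view matrix is
# just the inverse of the camera's translation.
camera_position = (0.0, 0.0, 1.0)
world_to_view = translation(-camera_position[0],
                            -camera_position[1],
                            -camera_position[2])

# Assumption: 60 degree vertical field of view, 4:3 aspect, planes at 0.1 and 100.
view_to_screen = perspective(60.0, 4.0 / 3.0, 0.1, 100.0)

local = [1.0, 1.0, 1.0, 1.0]                # vertex (1, 1, 1) in local space
world = transform(local_to_world, local)    # [3.0, 1.0, -4.0, 1.0]
view = transform(world_to_view, world)      # [3.0, 1.0, -5.0, 1.0]
clip = transform(view_to_screen, view)
ndc = [c / clip[3] for c in clip[:3]]       # perspective divide
```

The three matrices can also be multiplied together once with `mat_mul` and applied in a single step, which gives the same result for every vertex.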

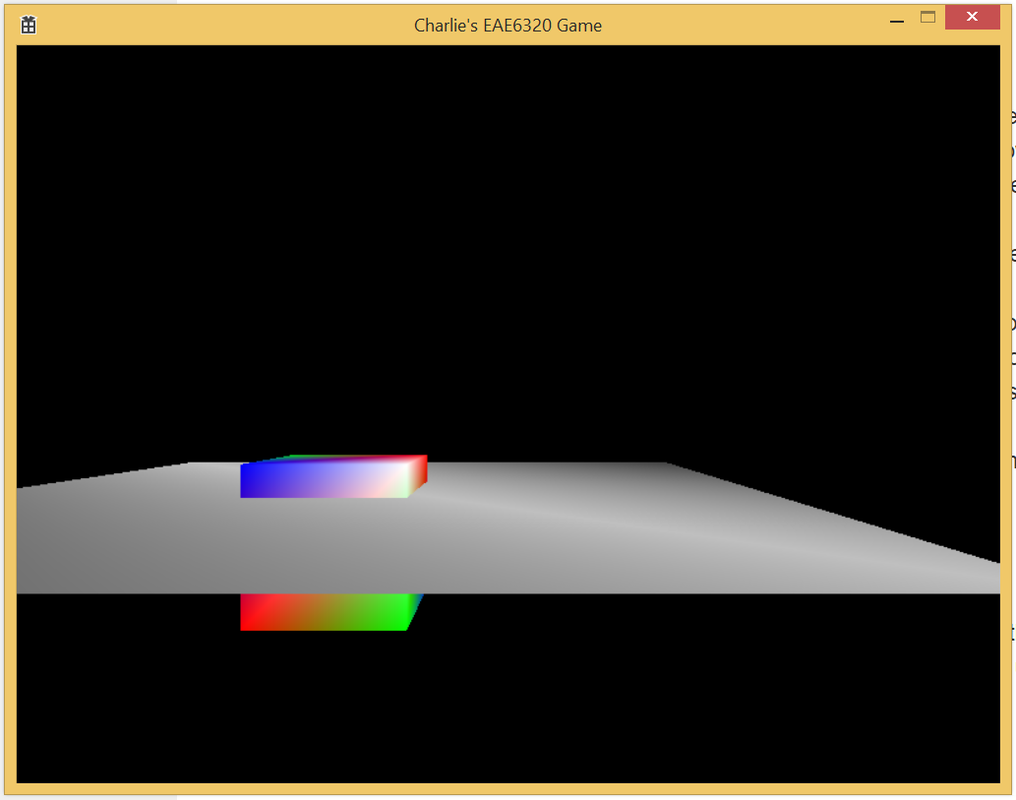

Here's a picture where two objects intersect. Our depth buffer is 16 bits, which gives 65536 distinct values. From the near plane to the far plane we have 100 - 0.1 = 99.9 units, so 99.9 / 65536 ≈ 0.0015 is the smallest depth difference we could resolve if depth values were spread evenly (with a perspective projection they are not: precision is finer close to the near plane and coarser toward the far plane). At the start of each frame, the depth buffer is cleared to the value representing the furthest distance. OpenGL uses a float between 0 and 1 here, where 1 means furthest. Then we draw the first object. Any visible pixel of the first object is necessarily nearer to the screen than the far clipping plane, so we write its color to the color buffer and its depth to the depth buffer. When we draw the second object, each pixel is again tested against the depth currently stored for that pixel. If it is not nearer to the observer, it is hidden by the previously drawn pixel and we don't need to draw it. We use less-or-equal as the depth function because effects like bump mapping process the same point several times; with the default less function, every pass after the first write would fail the depth test and be discarded.
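The depth test can be sketched on the CPU as follows. This is only an illustration of the logic, not how the GPU or the engine implements it: `make_buffers` and `draw_fragment` are hypothetical helpers, and the "buffers" are plain nested lists holding a single pixel.

```python
import operator

FAR_DEPTH = 1.0  # value the depth buffer is cleared to each frame

def make_buffers(width, height, clear_color="black"):
    """Return a freshly cleared (depth, color) buffer pair."""
    depth = [[FAR_DEPTH] * width for _ in range(height)]
    color = [[clear_color] * width for _ in range(height)]
    return depth, color

def draw_fragment(depth, color, x, y, frag_depth, frag_color,
                  depth_func=operator.le):
    """Write the fragment only if it passes the depth test."""
    if depth_func(frag_depth, depth[y][x]):
        depth[y][x] = frag_depth
        color[y][x] = frag_color
        return True
    return False

# Less-or-equal: a second pass at the same depth is still drawn.
depth, color = make_buffers(1, 1)
first = draw_fragment(depth, color, 0, 0, 0.4, "box")            # passes
hidden = draw_fragment(depth, color, 0, 0, 0.7, "floor")         # rejected
second_pass = draw_fragment(depth, color, 0, 0, 0.4, "box+bump") # passes

# Strict less: the second pass at the same depth is rejected.
depth_lt, color_lt = make_buffers(1, 1)
draw_fragment(depth_lt, color_lt, 0, 0, 0.4, "box", operator.lt)
second_pass_lt = draw_fragment(depth_lt, color_lt, 0, 0, 0.4, "box+bump",
                               operator.lt)
```

Here `second_pass` is `True` while `second_pass_lt` is `False`, which is the difference between the two depth functions for multi-pass effects.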

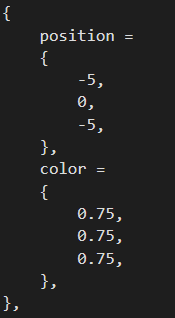

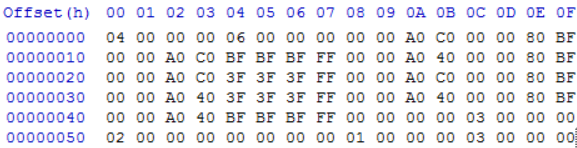

Above are the .lua and .binary versions of my floor mesh. I just added the z value after x and y. The change is visible in the binary file: after the two counts, each vertex has a 3 x 4 byte position and a 4 x 1 byte color, and the 2 x 3 indices come last.
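As a rough illustration of that layout, the sketch below packs and unpacks a floor mesh with Python's `struct` module. Several details are assumptions rather than facts about the actual file: the two counts are taken to be a vertex count and an index count stored as 32-bit unsigned integers, the indices are taken to be 32-bit as well, the byte order is little-endian, and the vertex values are invented.

```python
import struct

VERTEX_FORMAT = "<3f4B"  # 3 x 4-byte float position, 4 x 1-byte color
VERTEX_SIZE = struct.calcsize(VERTEX_FORMAT)  # 16 bytes, no padding

def pack_mesh(vertices, indices):
    """Counts first, then the vertices, then the indices."""
    data = struct.pack("<II", len(vertices), len(indices))  # assumed uint32 counts
    for (x, y, z), (r, g, b, a) in vertices:
        data += struct.pack(VERTEX_FORMAT, x, y, z, r, g, b, a)
    data += struct.pack("<%dI" % len(indices), *indices)    # assumed uint32 indices
    return data

def unpack_mesh(data):
    vertex_count, index_count = struct.unpack_from("<II", data, 0)
    offset = 8
    vertices = []
    for _ in range(vertex_count):
        x, y, z, r, g, b, a = struct.unpack_from(VERTEX_FORMAT, data, offset)
        vertices.append(((x, y, z), (r, g, b, a)))
        offset += VERTEX_SIZE
    indices = list(struct.unpack_from("<%dI" % index_count, data, offset))
    return vertices, indices

# Hypothetical floor quad: four vertices, two triangles of three indices each.
floor_vertices = [
    ((-5.0, -1.0, -5.0), (255, 255, 255, 255)),
    ((-5.0, -1.0,  5.0), (255, 0, 0, 255)),
    (( 5.0, -1.0,  5.0), (0, 255, 0, 255)),
    (( 5.0, -1.0, -5.0), (0, 0, 255, 255)),
]
floor_indices = [0, 1, 2, 0, 2, 3]

blob = pack_mesh(floor_vertices, floor_indices)
loaded_vertices, loaded_indices = unpack_mesh(blob)
```

Under these assumptions the file is 8 bytes of counts, 4 x 16 bytes of vertices, and 6 x 4 bytes of indices.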

The camera is a class derived from the Component class. It keeps a list of all cameras for future culling purposes, and it sits in a hierarchy parallel to the Renderable class. To create a camera, first create a game object with a position, then add a camera component to it, and then, as the assignment requires, add a controller component. The first camera created automatically becomes the main camera, which is publicly accessible, unless another one is set explicitly. Culling layers will be added to Renderable and Camera later to make them more versatile.
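The structure described above might look roughly like this. It is a sketch in Python rather than the engine's own code, and every name in it (`GameObject`, `add_component`, `all_cameras`, `main`, `Controller`) is a hypothetical stand-in for whatever the engine actually uses.

```python
class Component:
    """Base class for anything attached to a game object."""
    def __init__(self):
        self.game_object = None

class GameObject:
    def __init__(self, position):
        self.position = position
        self.components = []

    def add_component(self, component):
        component.game_object = self
        self.components.append(component)
        return component

class Camera(Component):
    all_cameras = []  # kept so rendering can later cull per camera
    main = None       # publicly accessible main camera

    def __init__(self):
        super().__init__()
        Camera.all_cameras.append(self)
        if Camera.main is None:
            # The first camera created becomes the main camera by default.
            Camera.main = self

class Controller(Component):
    """Placeholder for the input-driven controller component."""

# Create a game object with a position, then add camera and controller.
camera_object = GameObject(position=(0.0, 0.0, 1.0))
first_camera = camera_object.add_component(Camera())
camera_object.add_component(Controller())

# A second camera is tracked in the list but does not replace the main one.
second_camera = GameObject(position=(5.0, 0.0, 1.0)).add_component(Camera())
```

Assigning `Camera.main = second_camera` would switch the main camera explicitly.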

Here's the executable:

Current controls: A/S/D/W move the box in x and y, R/F move the box in z, and J/K/L/I move the camera in x and z.

| assignment10.zip |
