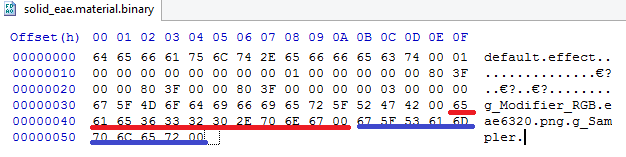

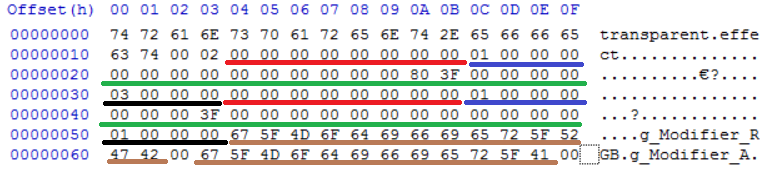

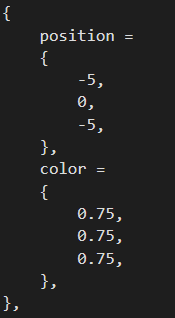

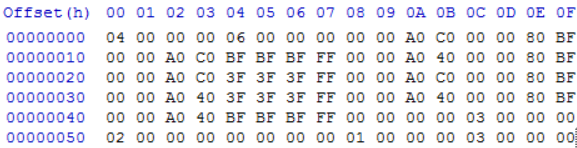

Above is the material binary file with the solid eae6320 texture. After the array of uniform data comes the array of texture data, which contains pairs of texture path (in red) and sampler uniform name (in blue). At load time, these are extracted into temporary string vectors.
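As a rough illustration of that extraction step, here is a minimal sketch. It assumes the pairs are stored as back-to-back null-terminated strings and that the pair count is already known; the actual file layout may differ. `TextureNames` and `ReadTexturePairs` are hypothetical names, not the ones in my code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical result type: parallel vectors of texture paths and sampler uniform names.
struct TextureNames
{
    std::vector<std::string> paths;
    std::vector<std::string> samplerNames;
};

// Reads `pairCount` (path, sampler name) pairs from `data`.
// Assumed layout: null-terminated strings stored back to back, path first.
// Returns false if the buffer ends before every string is terminated.
bool ReadTexturePairs(const char* data, std::size_t size, std::size_t pairCount, TextureNames& out)
{
    assert(data != nullptr || size == 0);
    TextureNames result;
    std::size_t offset = 0;
    for (std::size_t i = 0; i < pairCount * 2; ++i)
    {
        // Look for the terminator without reading past the end of the buffer.
        const void* found = (offset < size)
            ? std::memchr(data + offset, '\0', size - offset)
            : nullptr;
        if (found == nullptr)
        {
            return false;
        }
        const char* begin = data + offset;
        const char* end = static_cast<const char*>(found);
        // Even strings are paths, odd strings are sampler uniform names.
        ((i % 2 == 0) ? result.paths : result.samplerNames).emplace_back(begin, end);
        offset = static_cast<std::size_t>(end - data) + 1;
    }
    out = result;
    return true;
}
```

The two vectors only need to live long enough to construct the textures, which is why temporary storage is enough here.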

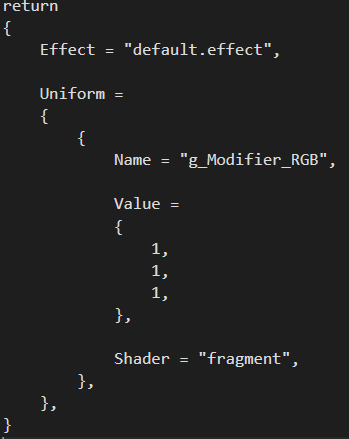

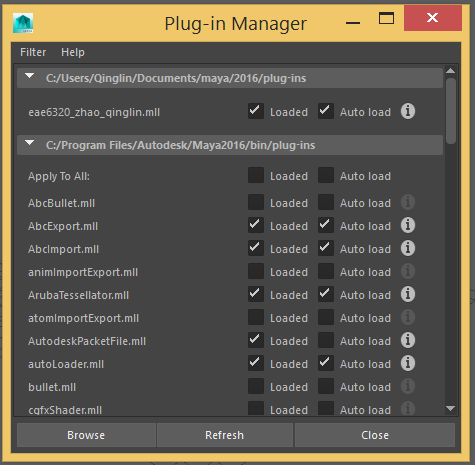

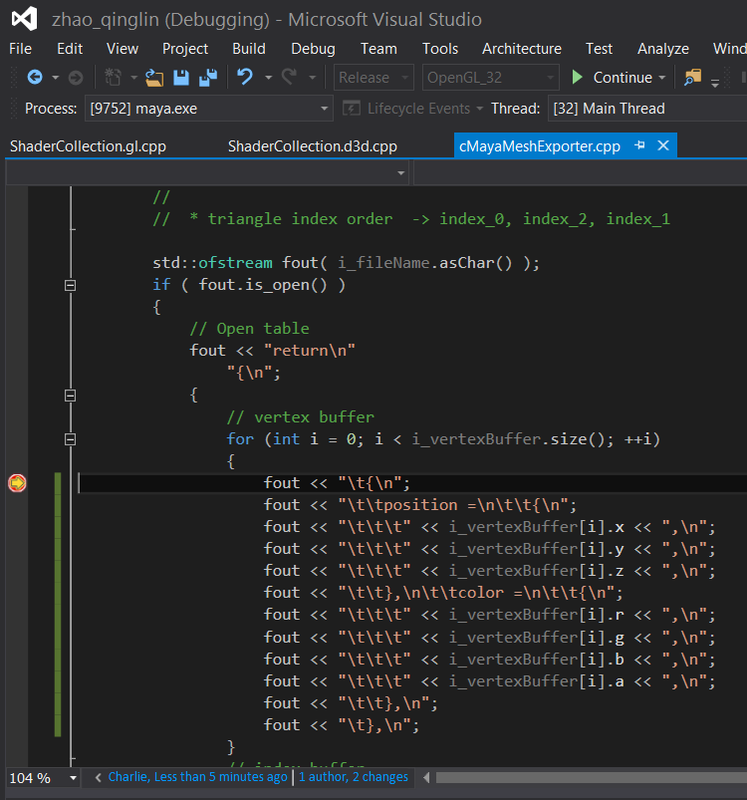

I chose to create a separate Texture2D class to keep things more structured. At graphics initialization, a pointer to the D3D device is copied in the initialize() method; the GL implementation leaves this method blank. At load time, the texture data and sampler handle are set in the Material constructor. At run time, the texture is applied in the Material class's function that sets material uniforms, by calling Texture2D::SetMaterial(size_t unit), where unit is unused by the D3D implementation. The Material class stores a vector of the current material's textures.
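The overall shape of that design can be sketched without any graphics API. In the sketch below, `Device`, the bind log, and `Material::SetUniforms` are stand-ins I made up for illustration; the real classes call D3D or GL where the comments say so.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Stand-in for the platform device (for example a D3D device); hypothetical.
struct Device {};

class Texture2D
{
public:
    // Called once at graphics initialization. A D3D build would keep the
    // device pointer; a GL build could leave this empty.
    static void Initialize(Device* device) { s_device = device; }

    Texture2D(std::string path, std::string samplerName)
        : m_path(std::move(path)), m_samplerName(std::move(samplerName)) {}

    // Applies the texture. `unit` is the GL texture unit; a D3D build would ignore it.
    // Here the call is only recorded, since no graphics API is available.
    void SetMaterial(std::size_t unit) const
    {
        s_bindLog.push_back({ m_samplerName, unit });
    }

    static const std::vector<std::pair<std::string, std::size_t>>& BindLog() { return s_bindLog; }

private:
    std::string m_path;
    std::string m_samplerName;
    static Device* s_device;
    static std::vector<std::pair<std::string, std::size_t>> s_bindLog;
};

Device* Texture2D::s_device = nullptr;
std::vector<std::pair<std::string, std::size_t>> Texture2D::s_bindLog;

class Material
{
public:
    // Builds one texture per (path, sampler name) pair read at load time.
    Material(const std::vector<std::string>& paths, const std::vector<std::string>& samplerNames)
    {
        for (std::size_t i = 0; i < paths.size() && i < samplerNames.size(); ++i)
        {
            m_textures.emplace_back(paths[i], samplerNames[i]);
        }
    }

    // Part of setting material uniforms: apply each texture on its own unit.
    void SetUniforms() const
    {
        for (std::size_t i = 0; i < m_textures.size(); ++i)
        {
            m_textures[i].SetMaterial(i);
        }
    }

    std::size_t TextureCount() const { return m_textures.size(); }

private:
    std::vector<Texture2D> m_textures;
};
```

Keeping the `unit` parameter in the shared interface means the Material code can stay identical on both platforms, even though only GL uses it.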

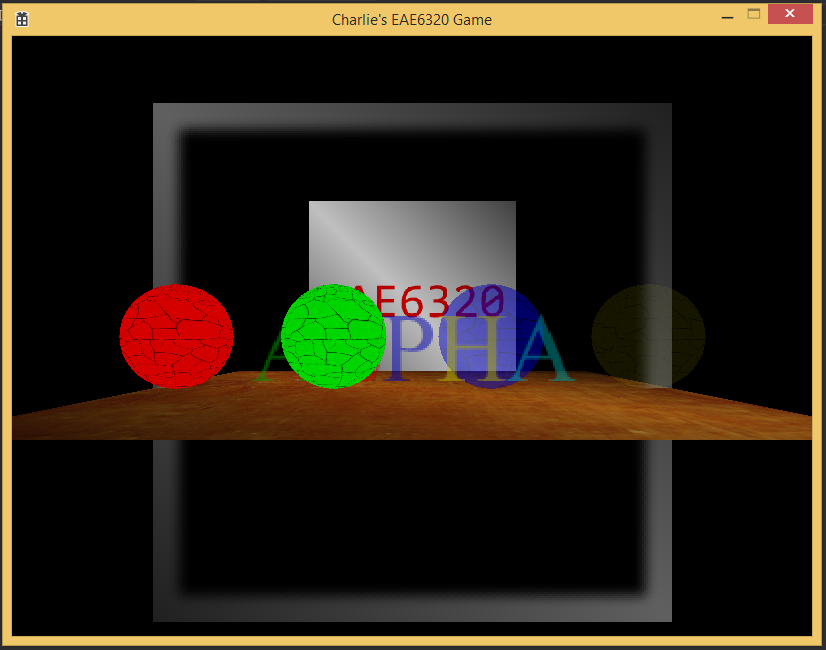

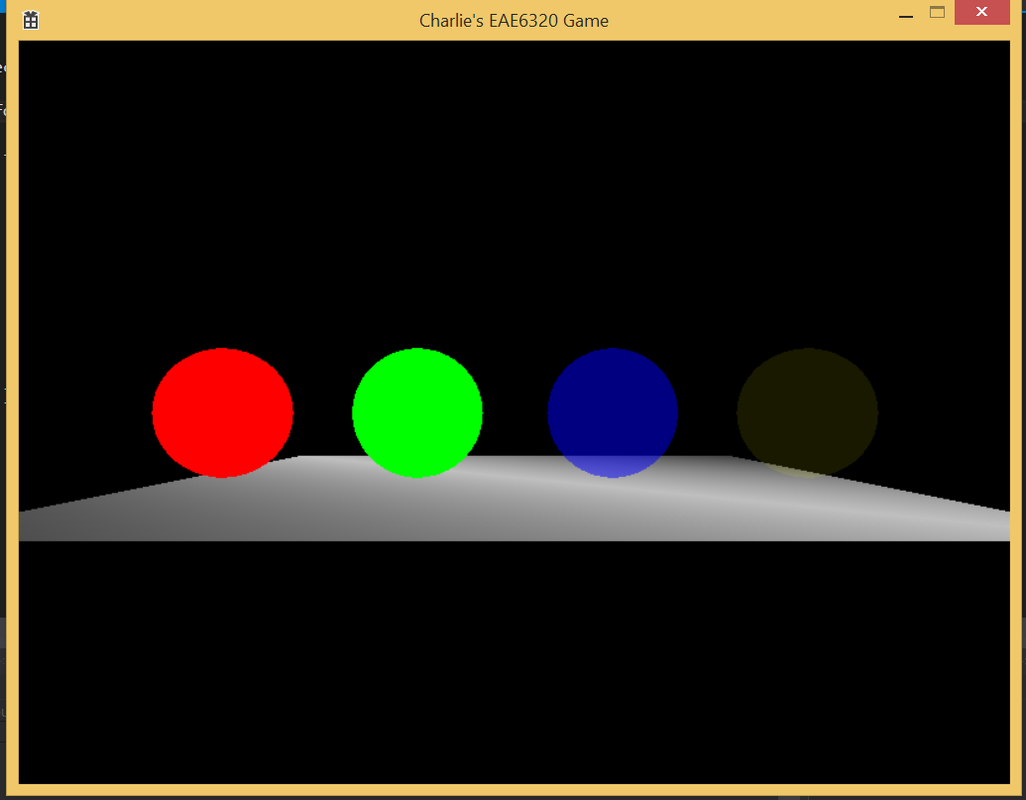

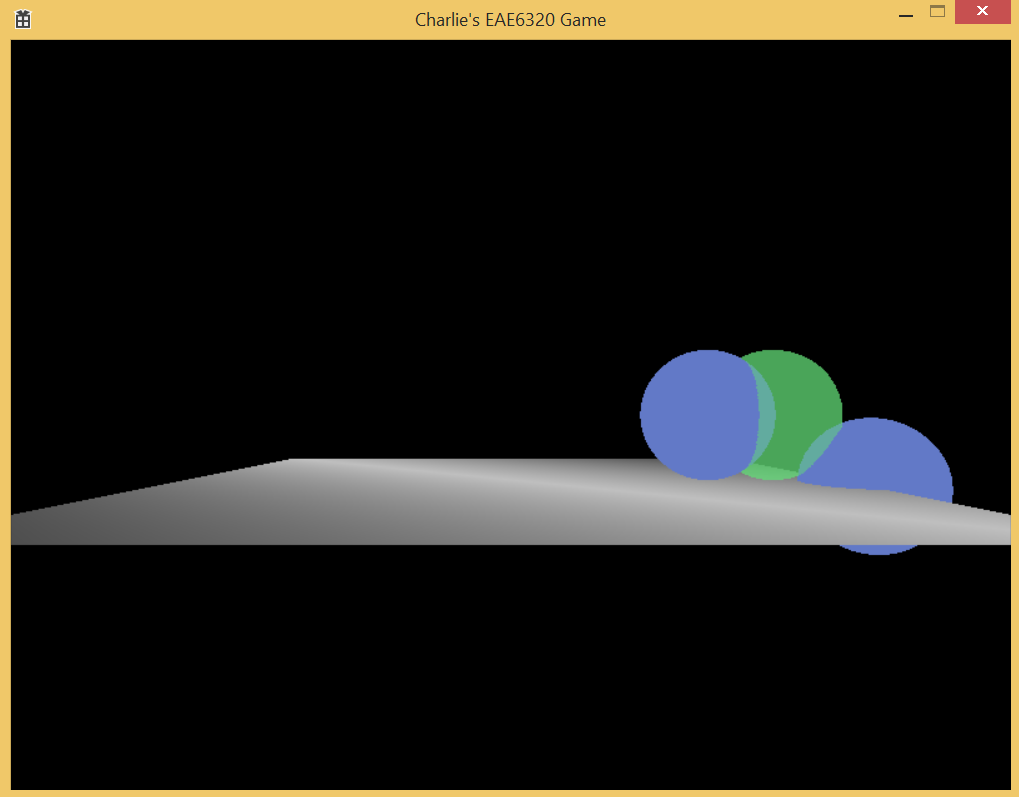

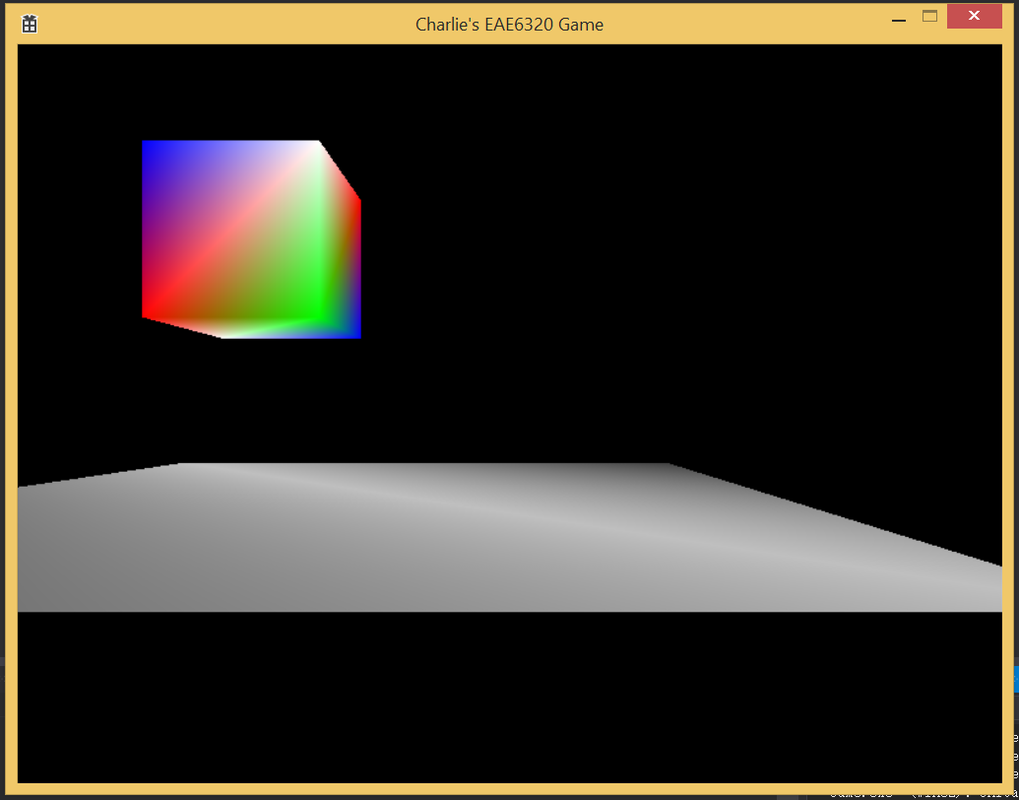

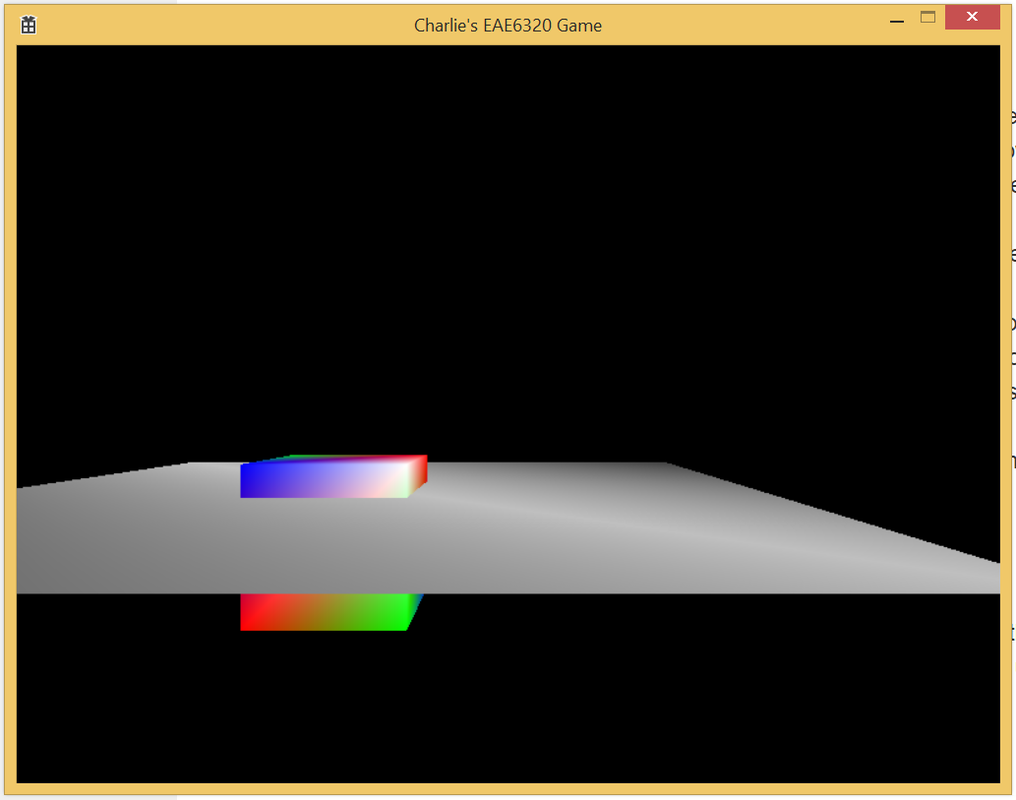

The following two figures are my D3D and GL screenshots, respectively:

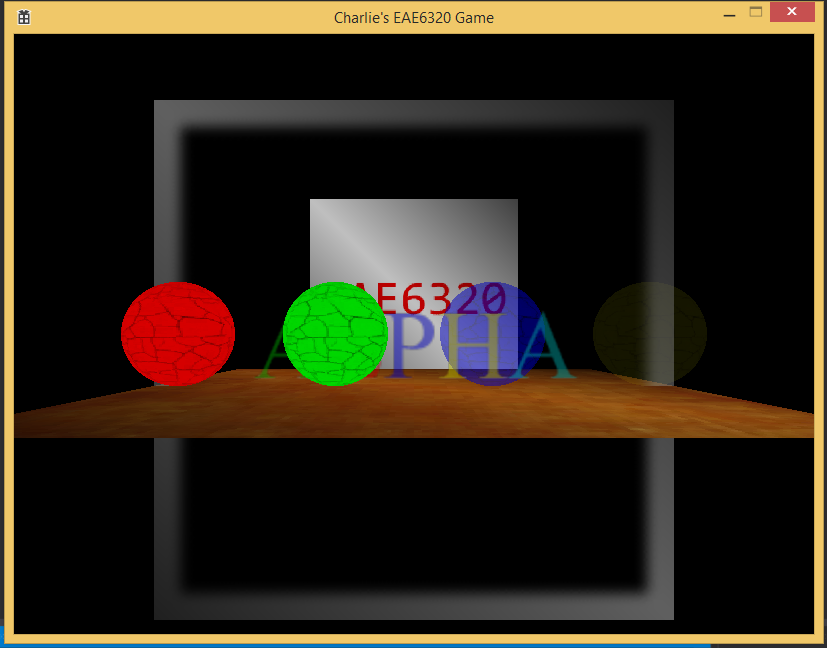

As JP pointed out, the GL implementation looks more "blurry" than the D3D version. I'll also add scaling in my next assignment.

Here's my executable:

| assignment13.zip |
