The immediate benefit of using materials is that many objects can share the same effect while looking different. Take an iron table and a wooden table as an example: they share the same render settings, such as the same shaders and depth-test settings, but use a different color or texture during rendering to appear as iron or wood. This means we can keep the earlier approach, where the effect does not need to be changed while rendering several objects that share it.

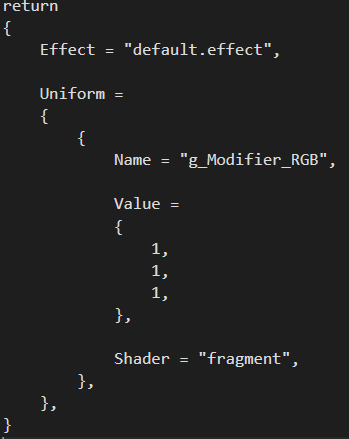

Here's a screenshot of my human-readable material file:

The effect path is extracted so that the effect binary can be read at run time. Then there is a uniform block, which contains an array of uniforms. In each uniform entry, the uniform name is used to look up its handle at run time. Next comes the value block, which holds 1 to 4 floats to match the different vector sizes used in shaders. Finally, the shader type tells Direct3D which shader's constant table to get the handle from.
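As a rough illustration of the constraints described above, here is a minimal sketch of how one parsed uniform entry could be validated before it is written to a binary file. The struct, the function names, the uniform name `g_color`, and the `"vertex"` / `"fragment"` spellings are all assumptions made for this example, not the actual format or code of my builder.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical in-memory form of one uniform entry read from the
// human-readable material file.
struct UniformEntry {
    std::string name;           // used later to look up the uniform handle
    std::vector<float> values;  // 1 to 4 floats, depending on the vector size
    std::string shaderType;     // assumed spelling: "vertex" or "fragment"
};

enum class ShaderType { Vertex = 0, Fragment = 1 };

// Maps the shader-type string to an enum; returns false for unknown strings.
bool ParseShaderType(const std::string& text, ShaderType& out) {
    if (text == "vertex")   { out = ShaderType::Vertex;   return true; }
    if (text == "fragment") { out = ShaderType::Fragment; return true; }
    return false;
}

// Checks one entry: non-empty name, 1 to 4 values, known shader type.
bool IsValidUniform(const UniformEntry& entry) {
    ShaderType ignored = ShaderType::Vertex;
    return !entry.name.empty()
        && entry.values.size() >= 1 && entry.values.size() <= 4
        && ParseShaderType(entry.shaderType, ignored);
}
```

Rejecting bad entries at build time means the run-time loader can trust the value count and shader type it reads.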

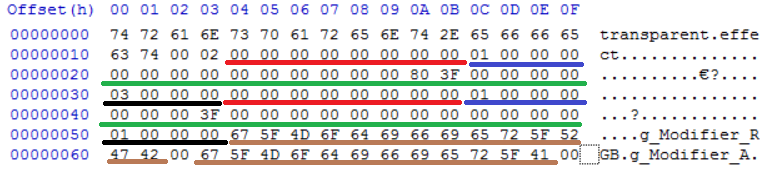

The following is a screenshot of my Direct3D transparent blue material:

As mentioned above, the effect path comes first. Next is the count of uniforms, which is optional, followed by the array of uniforms. The part underlined in red is the uniform handle, which defaults to a 64-bit nullptr. The blue part shows the shader type; in my case vertex is 0 and fragment is 1. The number of values a uniform needs varies, but I chose to hold them in a fixed-size container of 4 floats, so a count is needed to know how many of the values are actually used at run time. The green part contains the 4 float values, and the black part is the count of values. There is no particular reason for choosing this order. After the array of uniforms comes the array of uniform names, shown in brown, which is used to set the handles at load time.
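The layout above can be sketched as a small write/read round trip. This is only an illustration under stated assumptions: the field widths (a 1-byte shader type, a 1-byte value count, a 4-byte uniform count), the null-terminated strings, and every type and function name here are hypothetical, and fields are written one by one so no struct padding ends up in the file. My actual binary may differ in these details.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

enum class ShaderType : std::uint8_t { Vertex = 0, Fragment = 1 };

struct UniformRecord {
    std::uint64_t handle;     // placeholder (0) in the file, resolved at load time
    ShaderType shaderType;    // vertex = 0, fragment = 1
    float values[4];          // fixed-size container
    std::uint8_t valueCount;  // how many of the 4 values are actually used
};

struct Material {
    std::string effectPath;
    std::vector<UniformRecord> uniforms;
    std::vector<std::string> uniformNames;  // parallel to `uniforms`
};

template <typename T>
void Write(std::vector<char>& out, const T& value) {
    const char* bytes = reinterpret_cast<const char*>(&value);
    out.insert(out.end(), bytes, bytes + sizeof(T));
}

void WriteString(std::vector<char>& out, const std::string& text) {
    out.insert(out.end(), text.begin(), text.end());
    out.push_back('\0');
}

template <typename T>
T Read(const char*& cursor, const char* end) {
    assert(static_cast<std::size_t>(end - cursor) >= sizeof(T));
    T value;
    std::memcpy(&value, cursor, sizeof(T));
    cursor += sizeof(T);
    return value;
}

std::string ReadString(const char*& cursor, const char* end) {
    const void* found = std::memchr(cursor, '\0', static_cast<std::size_t>(end - cursor));
    assert(found != nullptr);
    const char* terminator = static_cast<const char*>(found);
    std::string text(cursor, terminator);
    cursor = terminator + 1;  // skip the terminator
    return text;
}

// Order: effect path, uniform count, uniform records, uniform names.
std::vector<char> SerializeMaterial(const Material& m) {
    assert(m.uniforms.size() == m.uniformNames.size());
    std::vector<char> out;
    WriteString(out, m.effectPath);
    Write(out, static_cast<std::uint32_t>(m.uniforms.size()));
    for (const UniformRecord& u : m.uniforms) {
        Write(out, u.handle);
        Write(out, u.shaderType);
        for (float v : u.values) Write(out, v);
        Write(out, u.valueCount);
    }
    for (const std::string& name : m.uniformNames) WriteString(out, name);
    return out;
}

Material ParseMaterial(const std::vector<char>& buffer) {
    const char* cursor = buffer.data();
    const char* end = cursor + buffer.size();
    Material m;
    m.effectPath = ReadString(cursor, end);
    const std::uint32_t count = Read<std::uint32_t>(cursor, end);
    for (std::uint32_t i = 0; i < count; ++i) {
        UniformRecord u;
        u.handle = Read<std::uint64_t>(cursor, end);
        u.shaderType = Read<ShaderType>(cursor, end);
        for (float& v : u.values) v = Read<float>(cursor, end);
        u.valueCount = Read<std::uint8_t>(cursor, end);
        assert(u.valueCount >= 1 && u.valueCount <= 4);
        m.uniforms.push_back(u);
    }
    for (std::uint32_t i = 0; i < count; ++i) {
        m.uniformNames.push_back(ReadString(cursor, end));
    }
    return m;
}
```

Keeping the names in a separate array after the records means every record has the same size, so the loader can step through the uniform array with a fixed stride and only touch the strings once, when resolving handles.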

Each platform has a different binary material because the handle type differs and the handles have different default values. In my case the binary is the same across build configurations, though.
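To make the platform difference concrete, here is a small sketch of how the handle type and its default could be selected at compile time. The macro `EXAMPLE_PLATFORM_D3D` and the names `UniformHandle` and `kDefaultHandle` are hypothetical. The Direct3D branch mirrors the fact that `D3DXHANDLE` is a string pointer; the other branch assumes an OpenGL build stores a `GLint`-style location with -1 meaning "not found", which may not match what my OpenGL build actually writes.

```cpp
#include <cassert>
#include <cstdint>

#if defined(EXAMPLE_PLATFORM_D3D)
    // Direct3D 9: D3DXHANDLE is a pointer type, so the placeholder is nullptr.
    using UniformHandle = const char*;
    constexpr UniformHandle kDefaultHandle = nullptr;
#else
    // Assumed OpenGL-style handle: a 32-bit location, -1 when unresolved.
    using UniformHandle = std::int32_t;
    constexpr UniformHandle kDefaultHandle = -1;
#endif
```

Because both the size and the placeholder value of the handle differ between the two branches, the bytes written for each uniform differ too, which is why the binaries are per-platform.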

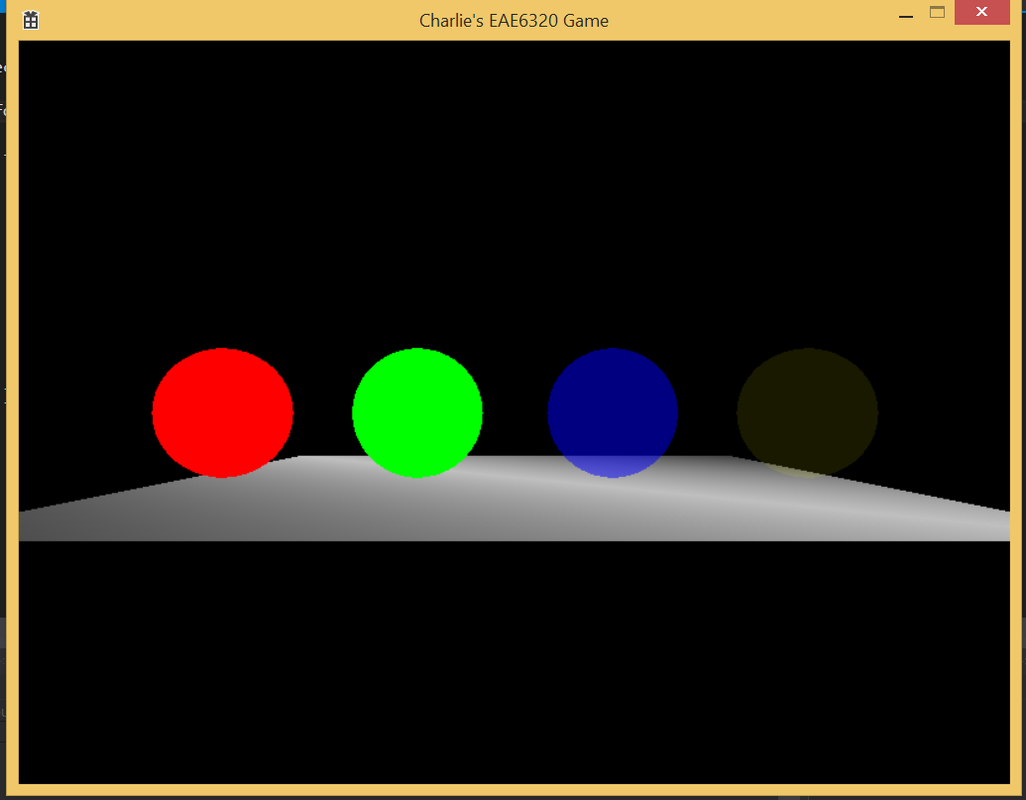

Here's a screenshot of my scene:

The same mesh is used for all four spheres, and its vertex color is white. The red sphere has a solid material with RGB (1, 0, 0), so only red passes the filtering. Green is similar, with RGB (0, 1, 0). These two materials use default.effect, which disables alpha blending. The blue and yellow spheres have RGB values of (0, 0, 1) and (1, 1, 0) respectively. The blue material has an opacity of 0.5, while the yellow one has 0.1. Their shared effect enables alpha blending and has a fragment shader uniform for setting alpha independently.
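The color math behind the scene can be sketched on the CPU. This is an illustration, not my shader code: it assumes the material color is multiplied component-wise with the vertex color, and that the transparent effect uses the common source-alpha / inverse-source-alpha blend. The names `Color`, `Modulate`, and `BlendOver` are made up for the example.

```cpp
#include <cassert>

struct Color { float r, g, b, a; };

// Component-wise product: a white vertex color leaves the material color
// unchanged, so a (1, 0, 0) material lets only red through.
Color Modulate(const Color& vertexColor, const Color& materialColor) {
    return Color{vertexColor.r * materialColor.r,
                 vertexColor.g * materialColor.g,
                 vertexColor.b * materialColor.b,
                 vertexColor.a * materialColor.a};
}

// Assumed blend: result = src * src.a + dst * (1 - src.a), applied to
// every channel including alpha.
Color BlendOver(const Color& src, const Color& dst) {
    const float inv = 1.0f - src.a;
    return Color{src.r * src.a + dst.r * inv,
                 src.g * src.a + dst.g * inv,
                 src.b * src.a + dst.b * inv,
                 src.a * src.a + dst.a * inv};
}
```

Under these assumptions, the blue sphere at opacity 0.5 contributes half of its color and lets half of the background through, while the yellow sphere at 0.1 is mostly background.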

Here's my executable:

| assignment12.zip |
