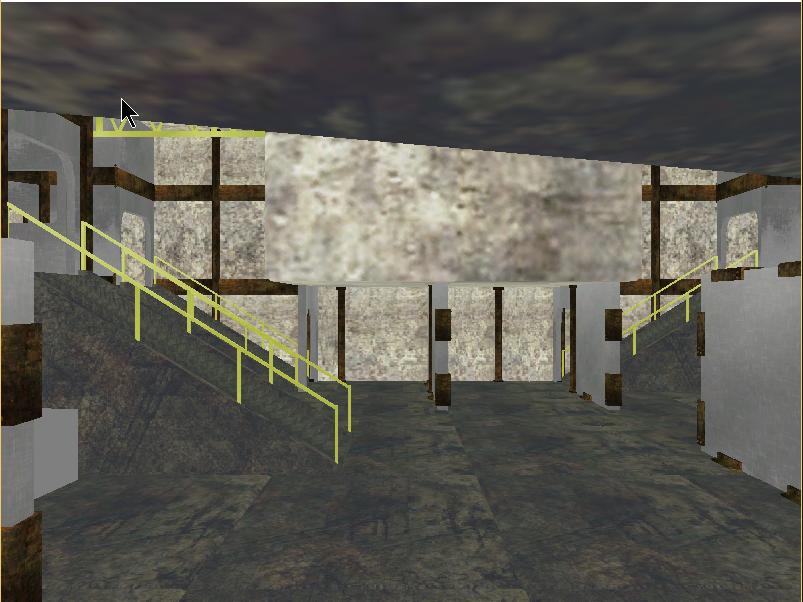

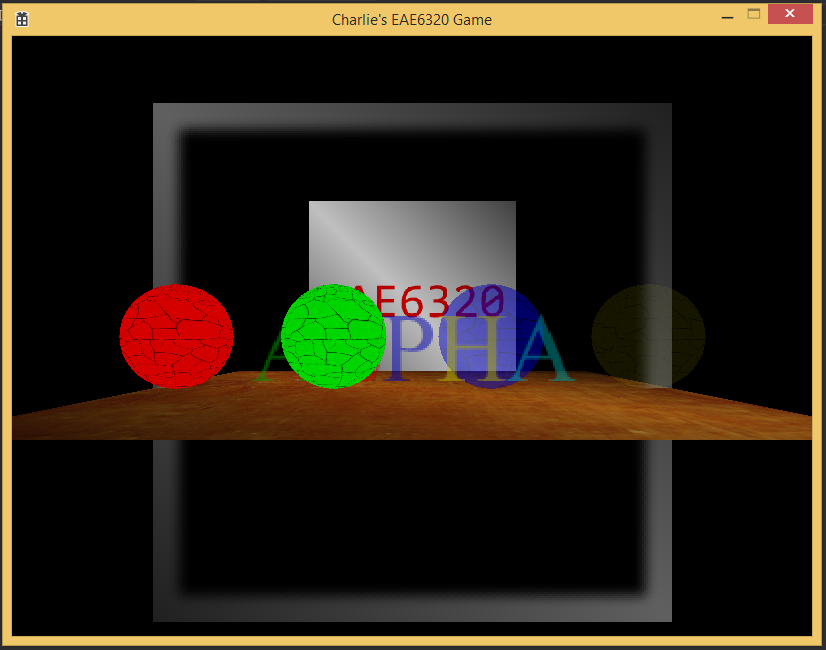

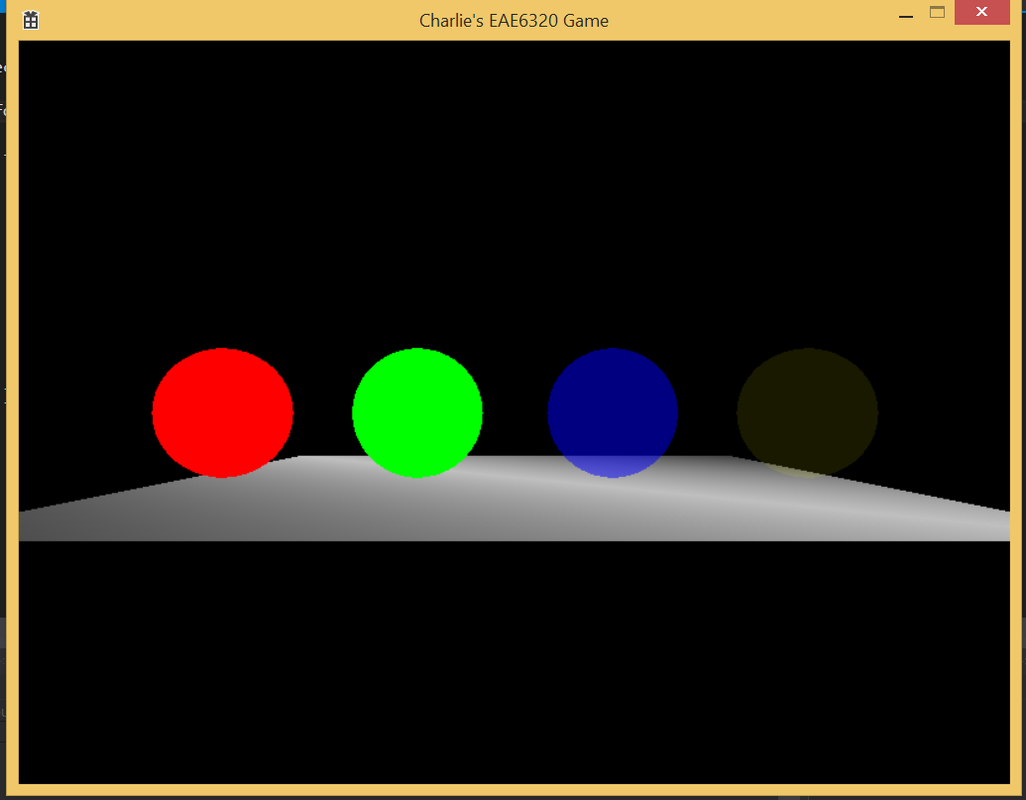

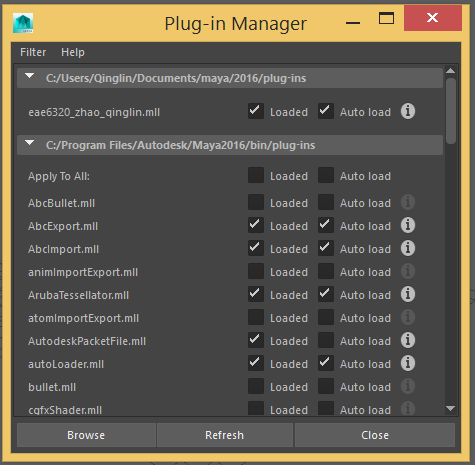

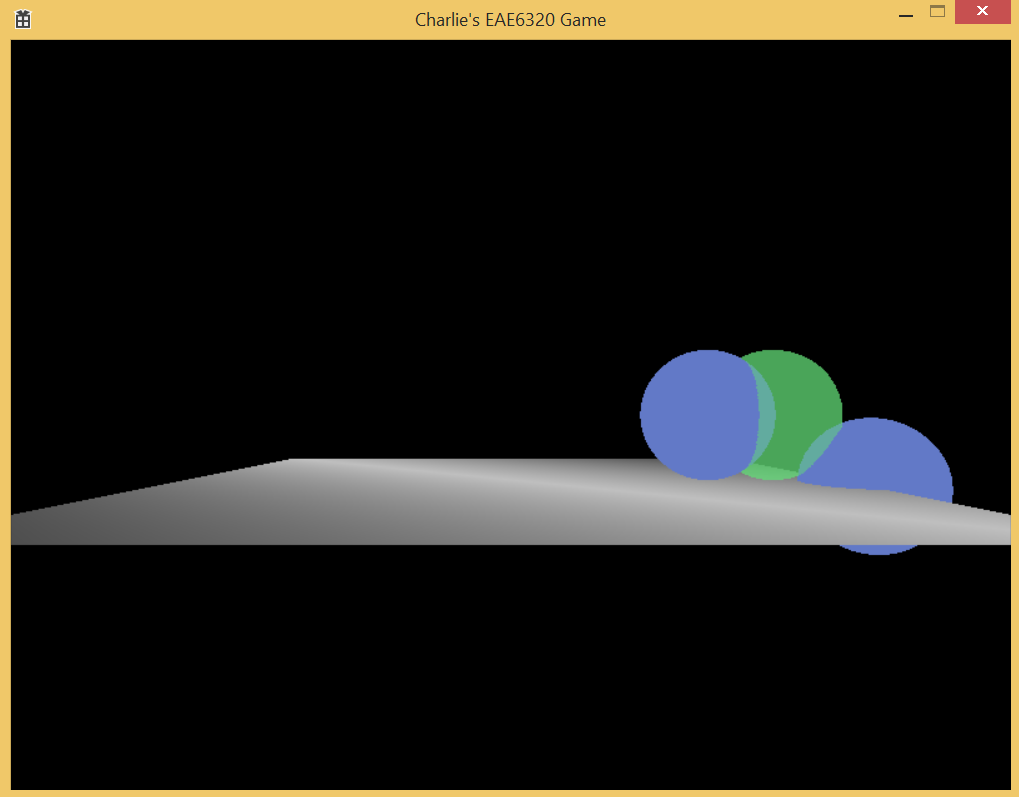

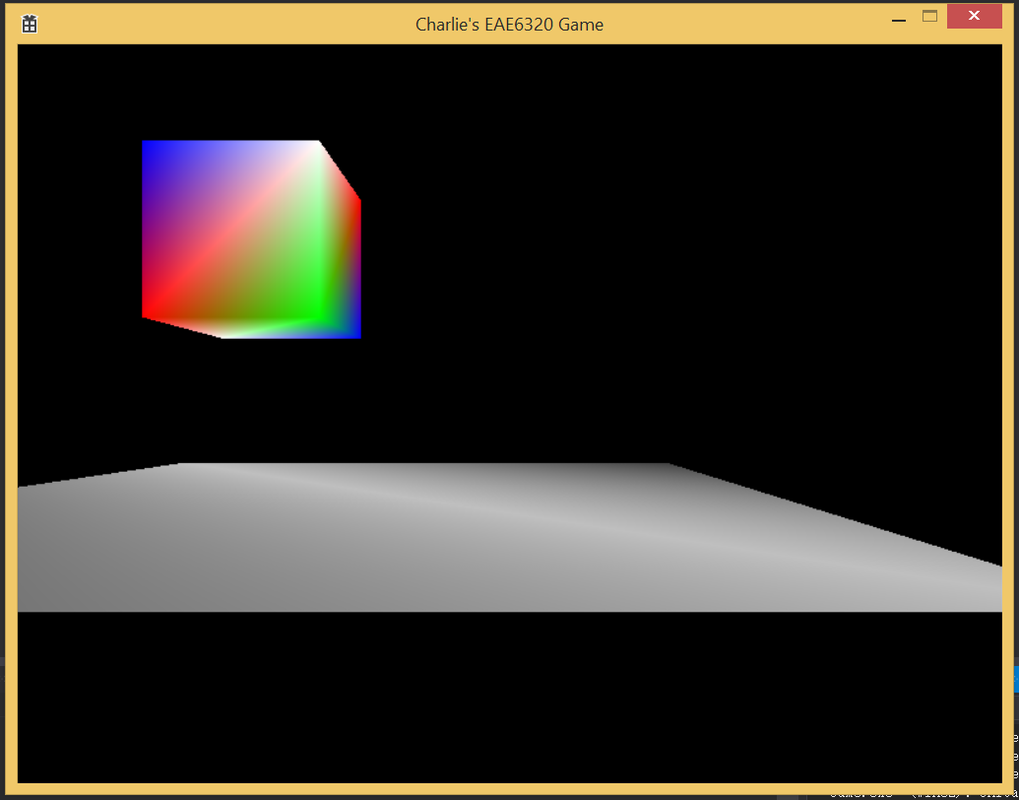

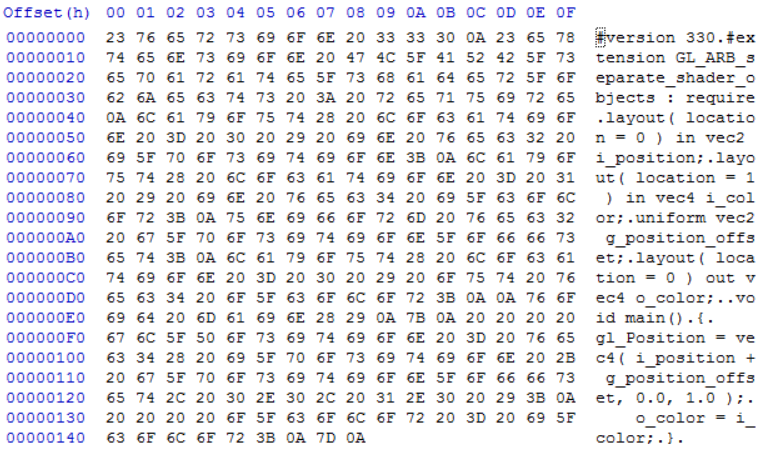

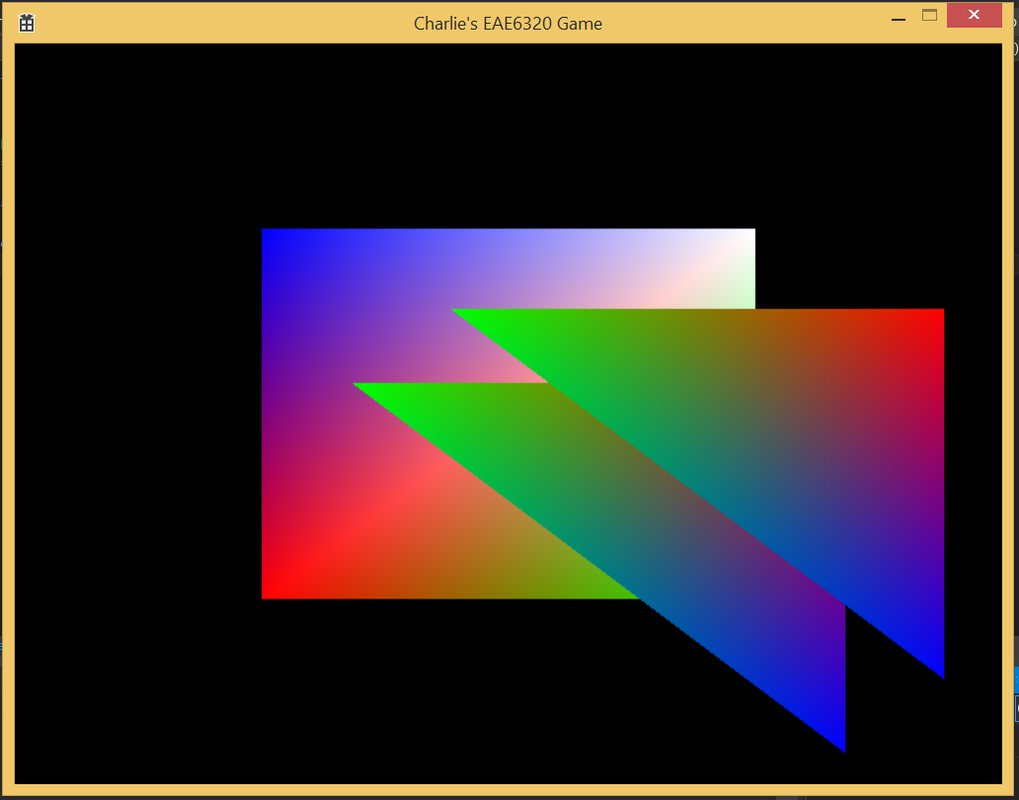

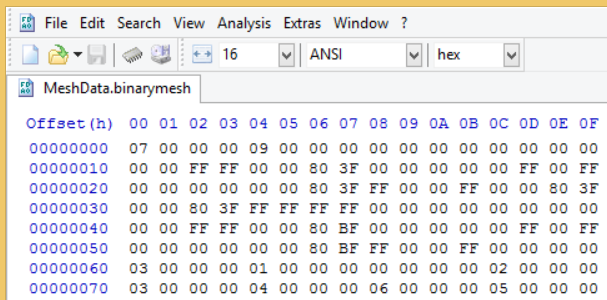

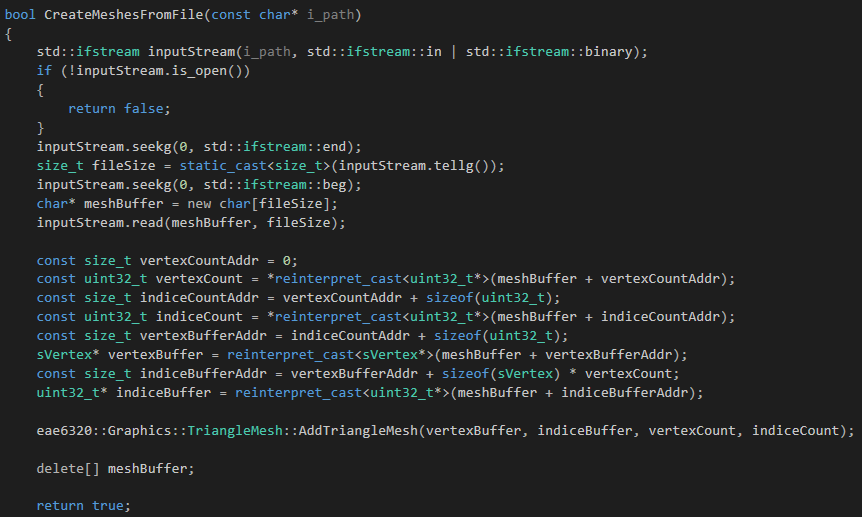

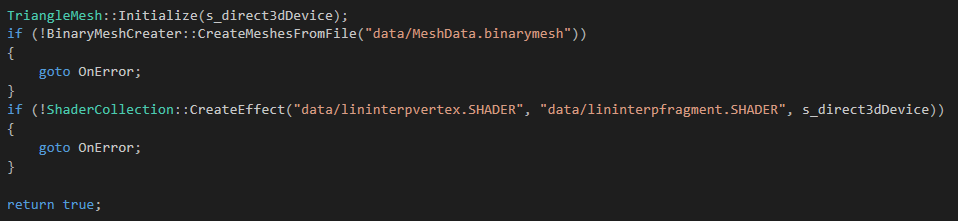

For the new semester, I continue to hone my engine. The first assignment is to load a scene made in Maya as the background of the game. The following pictures show the result:

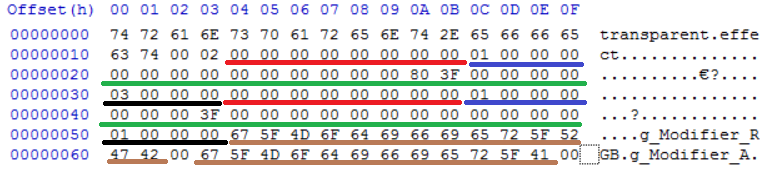

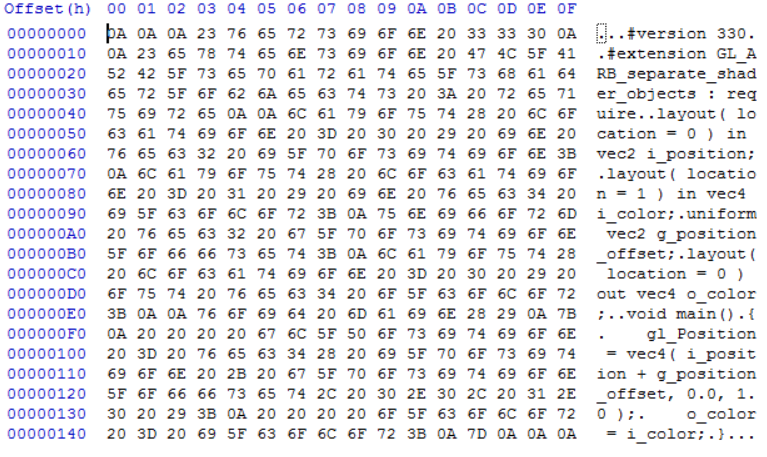

The second assignment is to create a mechanism for drawing debug primitives in the engine. As I have both D3D and OpenGL rendering for different platform settings, I chose to add it to the OpenGL code first.

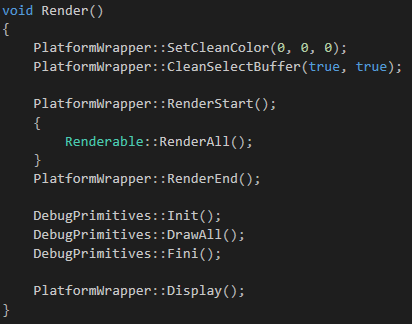

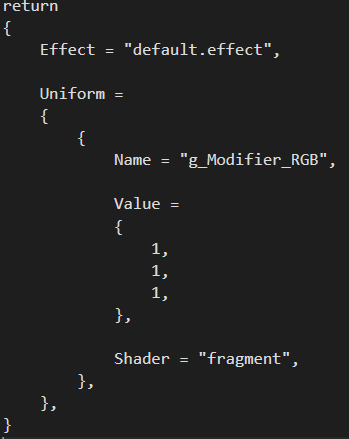

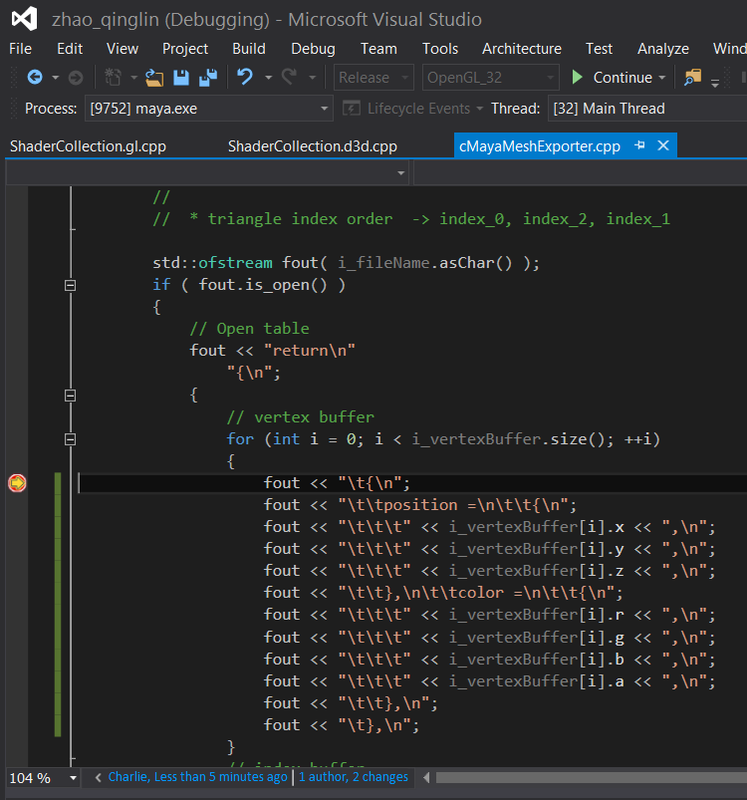

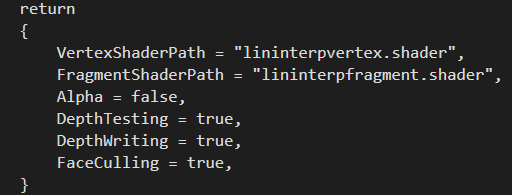

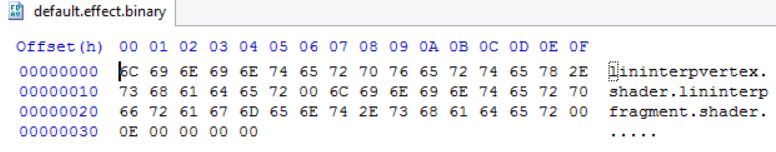

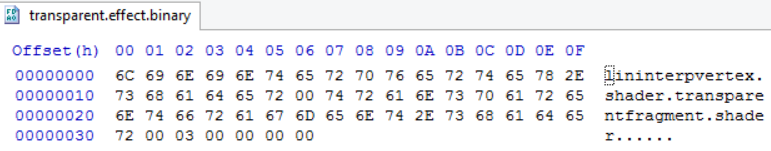

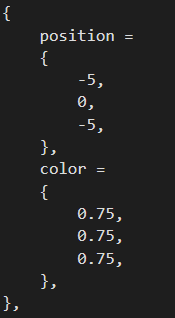

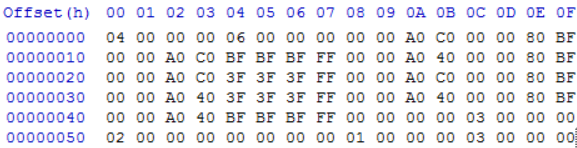

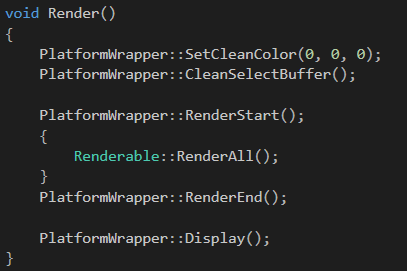

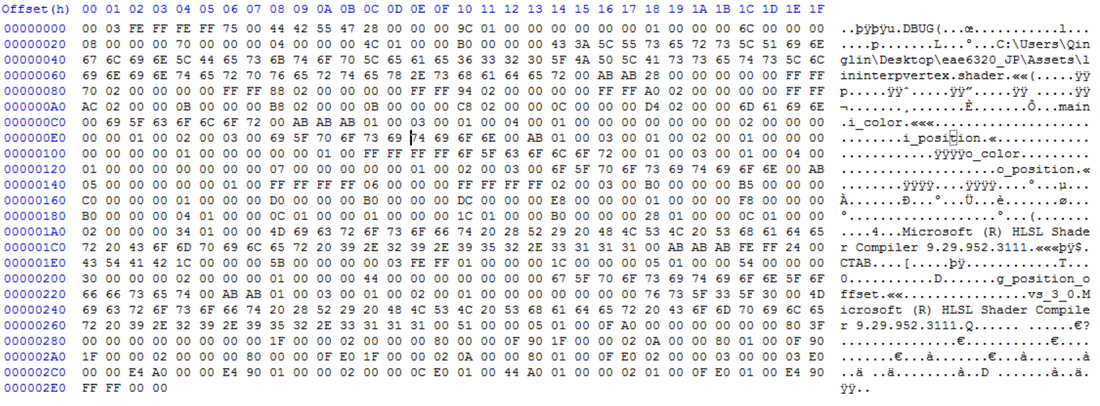

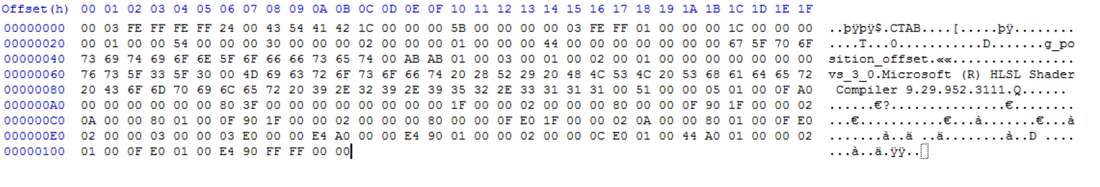

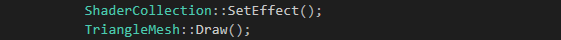

Considering that debug primitives will be disabled in the release build, I decided to draw them through a render list. The mechanism is also supposed to be easy to use, so I expose a header that declares drawXXX() methods. Each call adds a primitive object to the render list, and that object is deleted once it has been rendered. Besides the drawXXX() methods, I need initialize, render and cleanup methods. That is the whole interface. Because the primitives have different characteristics, the primitive classes all derive from a Primitive base class, which holds a virtual draw() method and a color member variable. At run time, a user of debug primitives only needs to include the DebugPrimitives header, which contains the interface. The drawXXX() methods add a Primitive reference to the debug primitive render list, which is rendered after the vertex array objects (VAO) are rendered. The following is the structure of the main render function:

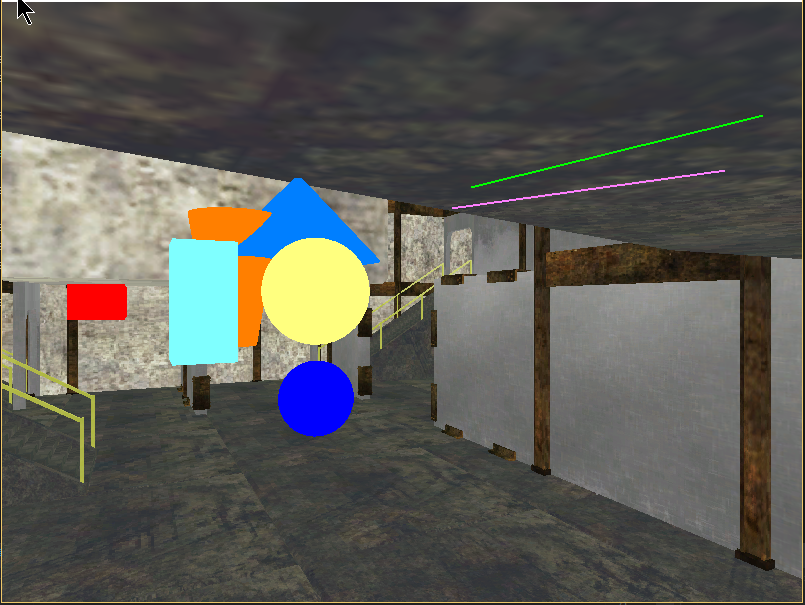

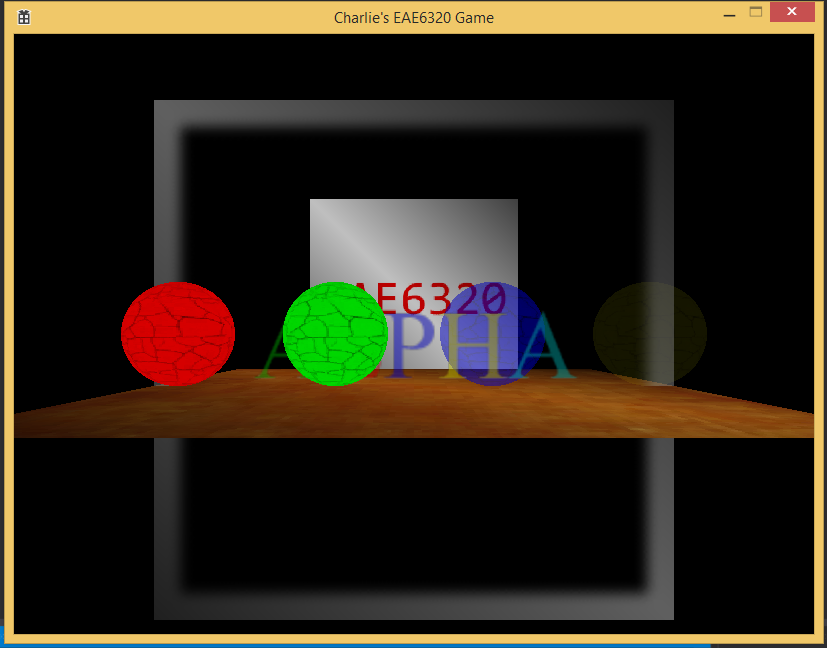

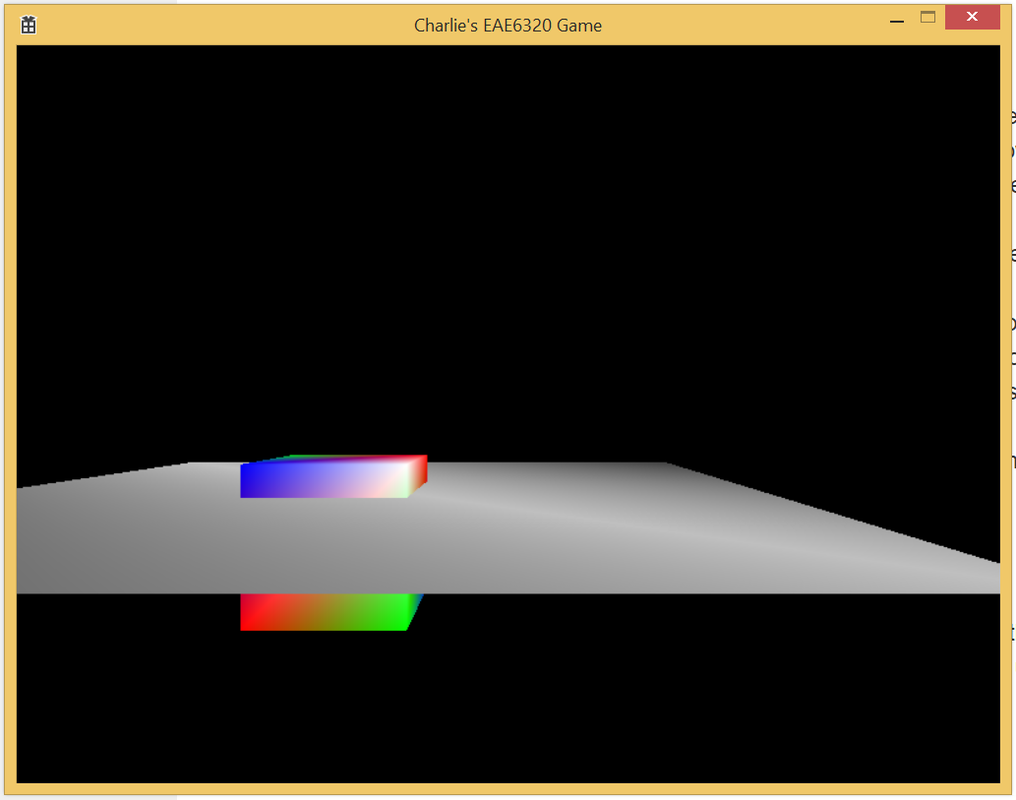
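As a rough illustration of the design described above, here is a minimal C++ sketch of a one-shot debug render list. It is not my engine's actual code: the names `Vec3`, `Color`, `Line`, `Sphere`, `setEnabled`, `renderFrame` and the `detail` helpers are assumptions made for the example, and draw() only records a label where the real version would issue OpenGL calls.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical sketch; all names below are illustrative assumptions.
namespace DebugPrimitives {

struct Vec3  { float x, y, z; };
struct Color { float r, g, b; };

// Base class: holds the color and declares the virtual draw().
class Primitive {
public:
    explicit Primitive(Color c) : color(c) {}
    virtual ~Primitive() = default;
    // A real draw() would issue OpenGL calls; here it only records a label.
    virtual void draw(std::vector<std::string>& calls) const = 0;
    Color color;
};

class Line : public Primitive {
public:
    Line(Vec3 a, Vec3 b, Color c) : Primitive(c), from(a), to(b) {}
    void draw(std::vector<std::string>& calls) const override { calls.push_back("line"); }
    Vec3 from, to;
};

class Sphere : public Primitive {
public:
    Sphere(Vec3 c, float r, Color col) : Primitive(col), center(c), radius(r) {}
    void draw(std::vector<std::string>& calls) const override { calls.push_back("sphere"); }
    Vec3 center;
    float radius;
};

namespace detail {
    struct State {
        std::vector<std::unique_ptr<Primitive>> list;  // the debug render list
        bool initialized = false;
        bool enabled = true;  // toggled at run time, e.g. by a key press
    };
    inline State& state() { static State s; return s; }
}

inline void initialize() { detail::state() = detail::State{}; detail::state().initialized = true; }
inline void cleanup()    { detail::state().list.clear(); detail::state().initialized = false; }
inline void setEnabled(bool on) { detail::state().enabled = on; }

// drawXXX() methods only queue a primitive; nothing is drawn yet.
inline void drawLine(Vec3 a, Vec3 b, Color c) {
    assert(detail::state().initialized);
    detail::state().list.push_back(std::make_unique<Line>(a, b, c));
}
inline void drawSphere(Vec3 center, float radius, Color c) {
    assert(detail::state().initialized);
    detail::state().list.push_back(std::make_unique<Sphere>(center, radius, c));
}

// Draws every queued primitive, then deletes them (one-shot list).
inline void render(std::vector<std::string>& calls) {
    detail::State& s = detail::state();
    if (s.enabled) {
        for (const auto& p : s.list) p->draw(calls);
    }
    s.list.clear();
}

// Stand-in for the main render function: scene first, debug primitives after.
inline std::vector<std::string> renderFrame() {
    std::vector<std::string> calls;
    calls.push_back("scene VAOs");  // placeholder for rendering the vertex array objects
    render(calls);
    return calls;
}

}  // namespace DebugPrimitives
```

Calling `DebugPrimitives::drawLine(...)` anywhere in game code queues a line for the current frame only, so it has to be called again each frame for as long as it should stay visible. In a release build the bodies of the drawXXX() methods could be compiled out behind a preprocessor flag, which is one possible way to get the "disabled in release" behaviour.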

The following figure shows the result after line, cube, sphere and cylinder primitives are added to the screen:

Here are the executable files, which include a release build, where debug primitives cannot be observed, and a debug build, in which the [p] key turns the current primitives on/off. You can also traverse the scene using [a] & [d] for rotation around the vertical direction, [w] & [s] for translation along the vertical direction, [j] & [l] for parallel movement, and [i] & [k] for moving forward and backward.

| assignment02.zip |
